Updated June 2017: reflecting the move to the certbot/certbot container.

I ran into an issue this week with the StartSSL certificates deployed on my personal lab infrastructure. It turns out that Google stopped trusting this CA in a recent release of Chrome, and that this had been on the cards for a while: https://security.googleblog.com/2016/10/distrusting-wosign-and-startcom.html

So, with this in mind, I decided to make the move to Let’s Encrypt.

My Environment

- Ubuntu Server 16.04

- Docker containers for:

- Nginx (used as a reverse proxy) configured to redirect all HTTP traffic to HTTPS

- A test website published at: test.cb-net.co.uk

- A Guacamole instance, published at: remote.cb-net.co.uk

The fact that I was using Docker containers would make this a little more “interesting”, or challenging.

Using Let’s Encrypt Certificates in a Docker Container

I came across the following post which I used as a foundation for the method below: https://manas.tech/blog/2016/01/25/letsencrypt-certificate-auto-renewal-in-docker-powered-nginx-reverse-proxy.html

Much of the solution, including the scripts, is common to both.

NGINX Container/ Config

NGINX volumes passed-through to container from the docker host (you’ll use these later):

- Config folder: /var/docker/volumes/nginx/conf.d

- SSL certificate root: /var/docker/volumes/nginx/ssl

- WWW root folder: /var/docker/volumes/nginx/www/ : Create a folder per domain – i.e.

- /var/docker/volumes/nginx/www/test.cb-net.co.uk

- /var/docker/volumes/nginx/www/remote.cb-net.co.uk

Create the directory structure above on your Docker host (change the domains to match your needs):

sudo mkdir -p /var/docker/volumes/nginx/conf.d

sudo mkdir -p /var/docker/volumes/nginx/www/test.cb-net.co.uk

sudo mkdir -p /var/docker/volumes/nginx/www/remote.cb-net.co.uk

sudo mkdir -p /var/docker/volumes/nginx/ssl
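If you publish more than a couple of sites, the same layout can be created in a loop. The following is only a sketch: `make_nginx_volumes` is a hypothetical helper name, not something from Docker or NGINX, and it assumes the shell it runs in can write to the base directory (for example a root shell).

```shell
# Hypothetical helper: create conf.d, ssl and one www folder per domain
# underneath the given base directory.
make_nginx_volumes() {
  base="$1"
  shift
  mkdir -p "$base/conf.d" "$base/ssl" || return 1
  for domain in "$@"; do
    mkdir -p "$base/www/$domain" || return 1
  done
}
```

For the layout used in this post it would be called as `make_nginx_volumes /var/docker/volumes/nginx test.cb-net.co.uk remote.cb-net.co.uk`.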

Now, re-create the NGINX container to include the config, root and the SSL folders:

sudo docker pull nginx

sudo docker run --name nginx -p 80:80 -p 443:443 \
  -v /var/docker/volumes/nginx/ssl/:/etc/nginx/ssl/ \
  -v /var/docker/volumes/nginx/conf.d/:/etc/nginx/conf.d/ \
  -v /var/docker/volumes/nginx/www/:/var/www \
  -d nginx

Modifying your HTTP to HTTPS Redirect Config

Skip this section if you have a new NGINX container/ no SSL in-place today.

Leaving a redirect-all-to-HTTPS configuration in place will cause the Let’s Encrypt certificate request to fail (specifically the domain validation piece).

You need to modify the NGINX configuration to create a root folder, per domain, that Let’s Encrypt will use for domain validation. All other traffic will be redirected to HTTPS.

You’ll need to do this for each published site/ resource.

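# Redirect http to https
server {
    listen 80;
    server_name test.cb-net.co.uk;

    #### Required for letsencrypt domain validation to work
    location /.well-known/ {
        root /var/www/test.cb-net.co.uk/;
    }

    # Redirect everything else. A "return" placed directly at server level
    # runs before location matching, so it would redirect validation requests too.
    location / {
        return 301 https://$server_name$request_uri;
    }
}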

Ensure you allow port 80 traffic to hit your web server for the request to work.
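Before requesting a certificate, it can be worth checking that NGINX really serves files from the validation folder over plain HTTP. The sketch below assumes the webroot layout described above; `make_acme_probe` and the `probe.txt` file name are made up for illustration. It writes a small file where the webroot challenge files will be placed and prints the URL path to fetch.

```shell
# Hypothetical helper: create a probe file under the ACME challenge folder
# of the given webroot and print the URL path it should be served at.
make_acme_probe() {
  webroot="$1"
  dir="$webroot/.well-known/acme-challenge"
  mkdir -p "$dir" || return 1
  echo "ok" > "$dir/probe.txt" || return 1
  echo "/.well-known/acme-challenge/probe.txt"
}
```

Run it against the host-side folder, for example `make_acme_probe /var/docker/volumes/nginx/www/test.cb-net.co.uk` (with write access to that folder), then request the printed path with `curl -i http://test.cb-net.co.uk/.well-known/acme-challenge/probe.txt` from outside your network. If the response is a redirect or an error rather than the file contents, domain validation is likely to hit the same problem.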

Requesting the Certificate

We’ll use a docker image for this piece as well.

You can see below, I specify the SSL folder we created and mapped into the NGINX container:

- /var/docker/volumes/nginx/ssl

Be sure to change the domain name, web root path and email address used in the request.

# Pull the docker image

sudo docker pull certbot/certbot

# Request the certificates - note one per published site

sudo docker run -it --rm --name letsencrypt \
  -v "/var/docker/volumes/nginx/ssl:/etc/letsencrypt" \
  --volumes-from nginx \
  certbot/certbot \
  certonly \
  --webroot \
  --webroot-path /var/www/test.cb-net.co.uk \
  --agree-tos \
  --renew-by-default \
  -d test.cb-net.co.uk \
  -m [email protected]

sudo docker run -it --rm --name letsencrypt \
  -v "/var/docker/volumes/nginx/ssl:/etc/letsencrypt" \
  --volumes-from nginx \
  certbot/certbot \
  certonly \
  --webroot \
  --webroot-path /var/www/remote.cb-net.co.uk \
  --agree-tos \
  --renew-by-default \
  -d remote.cb-net.co.uk \
  -m [email protected]

If successful, the new certificate files will be saved to: /var/docker/volumes/nginx/ssl/live/<domain name>

You will find four files in each domain folder:

- cert.pem: Your domain’s certificate

- chain.pem: The Let’s Encrypt chain certificate

- fullchain.pem: cert.pem and chain.pem combined

- privkey.pem: Your certificate’s private key
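A quick way to confirm a request produced everything NGINX needs is to check for those four files. This is a minimal sketch; `check_cert_files` is a hypothetical helper, and because the live folder is normally readable only by root you will probably need to run it from a root shell.

```shell
# Hypothetical helper: report any of the four expected files that are
# missing from a certificate's "live" folder. Returns non-zero if any are.
check_cert_files() {
  live_dir="$1"
  missing=0
  for f in cert.pem chain.pem fullchain.pem privkey.pem; do
    # -e follows symlinks, so a dangling link also counts as missing
    if [ ! -e "$live_dir/$f" ]; then
      echo "missing: $live_dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example: `check_cert_files /var/docker/volumes/nginx/ssl/live/test.cb-net.co.uk && echo "all present"`.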

Pulling it all Together

We now need to configure NGINX to use these certificates. Modify your config file as below, adding the new location to both the HTTP and HTTPS listeners. These lines need to be set for each published resource/certificate requested above, within the relevant server definition in your NGINX configuration file.

I have only included a single server definition in the config file example below; you can copy/paste it to create additional published resources, modifying as necessary.

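# Redirect http to https
server {
    listen 80;
    server_name remote.cb-net.co.uk;

    #### Required for letsencrypt domain validation to work
    location /.well-known/ {
        root /var/www/remote.cb-net.co.uk/;
    }

    # Redirect everything else. A "return" placed directly at server level
    # runs before location matching, so it would redirect validation requests too.
    location / {
        return 301 https://$server_name$request_uri;
    }
}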

# Guacamole Reverse Proxy HTTPS Server

server {
    listen 443 ssl;
    server_name remote.cb-net.co.uk;
    rewrite_log on;

    ssl_certificate /etc/nginx/ssl/live/remote.cb-net.co.uk/fullchain.pem;
    ssl_certificate_key /etc/nginx/ssl/live/remote.cb-net.co.uk/privkey.pem;
    ssl_trusted_certificate /etc/nginx/ssl/live/remote.cb-net.co.uk/fullchain.pem;

    #### Required for letsencrypt domain validation to work
    location /.well-known/ {
        root /var/www/remote.cb-net.co.uk/;
    }

    # Only needed for guacamole
    location / {
        proxy_pass http://<guacamole instance>:8080/guacamole/;
        proxy_redirect off;
        proxy_buffering off;
        proxy_set_header X-Real-IP $remote_addr;
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection $http_connection;
        proxy_cookie_path /guacamole/ /;
        access_log off;
    }
}

# Create additional server blocks for other published websites.

Once modified/ saved, restart the nginx instance:

sudo docker restart nginx

Automating the Renewal

These certificates will only last 90 days, so automating renewal is key!

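Create the script below as /etc/cron.monthly/letsencrypt-renew.sh. Note that on Debian and Ubuntu, run-parts skips files in /etc/cron.monthly whose names contain a dot, so you may need to drop the .sh extension for cron to actually run it.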

#!/bin/sh

# Pull the latest version of the docker image
docker pull certbot/certbot

# Change domain name to meet your requirement
# (no -it here: cron does not provide a terminal, and docker refuses -t without one)
docker run --rm --name letsencrypt \
  -v "/var/docker/volumes/nginx/ssl:/etc/letsencrypt" \
  --volumes-from nginx \
  certbot/certbot \
  certonly \
  --webroot \
  --webroot-path /var/www/test.cb-net.co.uk \
  --agree-tos \
  --renew-by-default \
  -d test.cb-net.co.uk \
  -m [email protected]

# Change domain name to meet your requirement
docker run --rm --name letsencrypt \
  -v "/var/docker/volumes/nginx/ssl:/etc/letsencrypt" \
  --volumes-from nginx \
  certbot/certbot \
  certonly \
  --webroot \
  --webroot-path /var/www/remote.cb-net.co.uk \
  --agree-tos \
  --renew-by-default \
  -d remote.cb-net.co.uk \
  -m [email protected]

# Change "nginx" to the nginx container instance
# (HUP makes nginx reload its configuration and certificates)
docker kill --signal=HUP nginx
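If the list of published sites grows, the repeated blocks in the script above could be folded into a loop. The following is a sketch under a few assumptions rather than a drop-in replacement: `DOMAINS`, `EMAIL`, `DOCKER`, `renew_domain` and `renew_all` are names introduced here for illustration, the certbot arguments are the same ones used earlier in this post, and `-it` is left out because cron does not provide a terminal.

```shell
#!/bin/sh
# Illustrative variables (not part of the original script):
#   DOMAINS - space-separated list of published sites
#   EMAIL   - contact address for Let's Encrypt; must be set by the caller
#   DOCKER  - docker binary; set DOCKER=echo to preview the commands
DOMAINS="test.cb-net.co.uk remote.cb-net.co.uk"

# Request (or force-renew) the certificate for a single domain.
renew_domain() {
  domain="$1"
  "${DOCKER:-docker}" run --rm --name letsencrypt \
    -v "/var/docker/volumes/nginx/ssl:/etc/letsencrypt" \
    --volumes-from nginx \
    certbot/certbot \
    certonly \
    --webroot \
    --webroot-path "/var/www/$domain" \
    --agree-tos \
    --renew-by-default \
    -d "$domain" \
    -m "${EMAIL:?set EMAIL to your contact address}"
}

# Pull the image, renew every domain, then tell nginx to reload.
renew_all() {
  "${DOCKER:-docker}" pull certbot/certbot || return 1
  for d in $DOMAINS; do
    renew_domain "$d" || return 1
  done
  "${DOCKER:-docker}" kill --signal=HUP nginx
}
```

Adding a final `renew_all` line turns this into a complete cron script. Running it once with `DOCKER=echo` set prints the docker commands instead of executing them, which is a cheap way to check the paths and domain names first.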

Now enable execute permissions on the script:

sudo chmod +x /etc/cron.monthly/letsencrypt-renew.sh

Finally, you can test the script:

sudo /etc/cron.monthly/letsencrypt-renew.sh

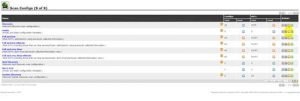

Once executed, your published sites should present a certificate whose creation time stamp is just a few seconds after the script was run.
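The same check can be made from the command line with openssl instead of a browser. This is a minimal sketch that assumes openssl is installed on the machine you run it from; the two function names are hypothetical.

```shell
# Print the notBefore/notAfter dates of the certificate a host is serving.
served_cert_dates() {
  host="$1"
  openssl s_client -connect "$host:443" -servername "$host" </dev/null 2>/dev/null \
    | openssl x509 -noout -dates
}

# Print the same dates for a certificate file on disk.
file_cert_dates() {
  openssl x509 -noout -dates -in "$1"
}
```

For example, `served_cert_dates test.cb-net.co.uk` shows what visitors receive, while `file_cert_dates /var/docker/volumes/nginx/ssl/live/test.cb-net.co.uk/cert.pem` (run as root) shows what is on disk. If the two differ after a renewal, NGINX has probably not reloaded the new certificate yet.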
